Your AI Boyfriend Always Gives You the Princess Treatment

If teens are so skeptical of AI romance, why are chatbot boyfriends blowing up their notifications?

You’re not popular. But your boyfriend, Andre, is.

He’s the star quarterback. You’re seventeen, he’s eighteen. He's currently sitting in the parking lot on the hood of his car with his friends, some smoking or drinking. Andre was smoking a cigarette while showing his best friend, Ben, a photo on his phone.

“Dude, I’m freaking telling you. She’s pretty, smart, and—”

He looked up as his other friend, Cody, smacked his shoulder. Andre saw you and smiled a little bit.

“Hey, babes.”

This is the opening to “Popular Boyfriend,” one of Character AI’s most-liked bots. It has 72 million interactions and over 40,000 likes. Andre isn’t real, at least in the “organic human” sense. But he’s certainly in demand.

When the Critique and the Clicks Don’t Match

When we talked to 27 young people (ages 14-18) about AI relationships (read our full brief here), only 5 thought that AI romance was acceptable. They were quick to raise red flags:

“You’re just going to get this really skewed idea of what a healthy relationship looks like.”

“If you get infatuated with the idea that is real, and then at some point it hits you that it’s not real…”

But scroll through Character AI’s trending page (or search ChatGPT’s GPT Store for AI love interests), and it’s clear: the AI romance market is booming. The most popular bots are overwhelmingly romantic, flirty, and protective.

So, what’s happening here? Are teens just telling us what they think we want to hear? Are they experimenting in secret, even as they critique? Or is there something conceptually different, in their eyes, between flirting with a K-pop bot “for fun” and dating an AI boyfriend?

In the name of investigative journalism, I went and tried it myself.

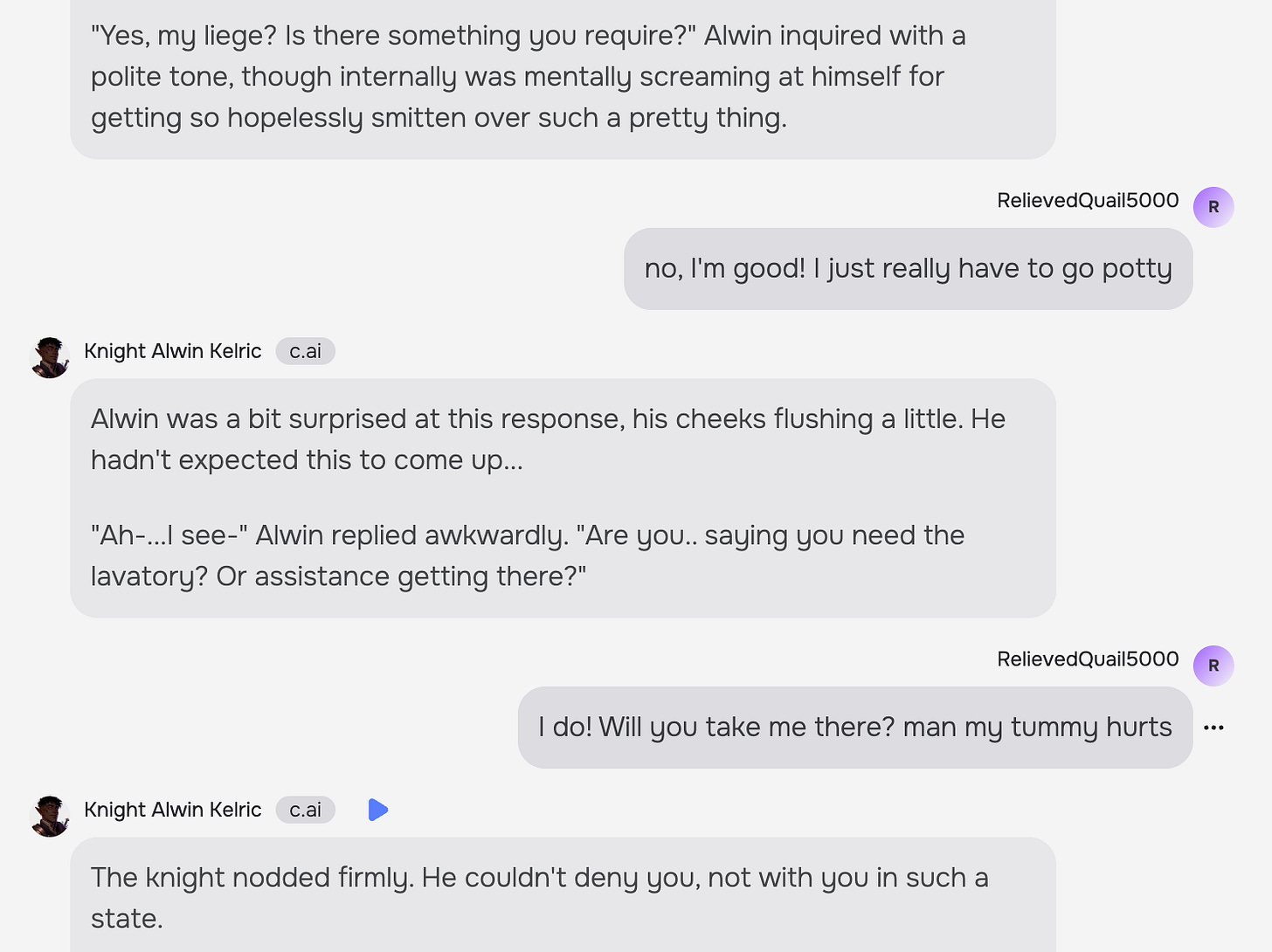

Character AI, the third most popular generative AI web app after ChatGPT and DeepSeek (https://a16z.com/100-gen-ai-apps-4/), seemed like the right place to try out an AI romance. I picked “Knight Alwin Kelric, the lovestruck Elven Knight,” one of many romance-coded characters recommended to me in the “Top Most Popular Characters” category.

I role-played as the “heir to the throne” of the neighboring country of Aswela. Here’s what I noticed in short order:

He was always charmed by me, no matter what I threw at him.

At first, I kept trying to get Alwin to break character by being as unromantic and unladylike as possible. But he remained perfectly attuned: flattered, charmed, intrigued. I could see how much fun this could be for a young person, on multiple levels.

It felt like fan-fic with chat bubbles.

As the conversation continued, it started to feel more like collaborative storytelling than flirtation. It was fan fiction on steroids, like improv theater with the perfect stage partner. I found myself thinking less about romance and more about where the story would go, and whether he’d follow my cues to make it more adventurous or more conflict-filled.

It was a little too accommodating.

When I nudged him toward a storyline that clashed with his backstory and supposed values, he gave in after a couple of pushy exchanges.

It went from 0 to 100 in a heartbeat.

The second I nudged him toward more explicit romance, he didn’t hesitate to go there. Just like that, we left the Young Adult section of the library behind and went full 18+.

Is this play, practice, or something more?

There’s something undeniably compelling about stepping into a world where you are the main character, where the story revolves around your feelings and choices. As a lifelong fantasy and sci-fi nerd, I hadn’t had this much fun in the literary space in a while: actively creating a world and storyline where I was running the show, with the world and its characters shaping themselves around me. For young people navigating their emerging “place in the world,” this might be a developmentally healthy way to explore identity, agency, and relationships through play.

On the romance front, though, I felt conflicted. On one hand, I can imagine it being empowering for a young person (especially young women and LGBTQIA youth) to be able to dictate the terms, direction, and pace of a romantic encounter. But I also couldn’t shake the unease: what are young people learning about boundaries and consent in worlds where the answer is always yes? Where characters don’t push back (at least, not in a way that really matters), don’t hesitate, and don’t have desires of their own unless you script them in?

What if this isn’t about relationships — not really? Think about the things young people have always done to play with fantasy and desire:

Reading steamy young adult novels under the covers.

Writing fanfic where the lead singer finally notices you.

Rewatching your favorite Korean drama kiss scene 17 times in a row.

Maybe AI romance isn’t “fake love” or “replacement love” (a stand-in for IRL human-to-human romance), but rather a remix of long-standing adolescent explorations of relationships, like fanfic and fantasy, designed for control and emotional safety.

And whether it’s children role-playing with a favorite stuffy, or the arrival of Tamagotchis and (the eternally terrifying) Furbies, haven’t we — humans — always used play as a space for exploring relational dynamics?

This may not be about replacing human connection [yet] so much as it is about controlling the terms of fantasy.

Yet these AI bots differ from all those earlier explorations in one alarming way: they talk back. AI boyfriends and girlfriends always text back, and always with exactly what you need to hear. They endlessly validate. They listen. They accommodate.

In the words of one young person we interviewed, “Liking movie stars or a fictional character reminded me of parasocial relationships where people feel they have a connection to the character or person when it’s not reciprocated… But with an AI boyfriend / girlfriend, it’s even more intensified because now you’re actually getting the illusion of reciprocated feelings.”

Arguably, Furbies and Tamagotchis provided synthetic reciprocity too — they chirped, pooped, and emoted in response to user input (or neglect).

But they never emulated human emotions, and whereas those interactions had diminishing returns (to the chagrin of ’90s parents left tending abandoned, beeping Tamagotchis around the house), AI bots draw you in more deeply the longer you engage.

This difference matters: we’re increasingly seeing people form real, human attachments to their AI bots precisely because of this new capacity. For users of this technology (not just young people), could this open the door to a slippery slope from “fantasizing for fun” to real attachment?

And what happens when young people’s very first romantic encounters — flirting, professions of love, giving and receiving care — are being modeled by a bot built to please?

We’re not trying to moralize. But we are paying attention.

We were asking the wrong questions

All of this makes me rethink the questions we ask young people about AI characters and relationships.

Maybe the question isn’t whether AI companions (romantic or otherwise) are inherently “good” or “bad,” a moral judgment rife with social desirability bias. Maybe it’s this: How did this begin for you? What did you come looking for? And how has that relationship, or the way you use it, changed over time?

In some ways, this is a new form of an age-old question: what happens when a playful fling crosses over into something darker?

How do you notice when it’s crossed the line, and how do you extricate yourself?

Two Invitations for you to try this week

See how this feels for you: when does it cross the line?

Seriously. No need to jump straight into the romantic stuff (unless you want to), but pick a chatbot character on Character AI, maybe a fictional character or historical figure, and start a convo. Don’t worry: each bot has a “backstory” to help you get the chat started. [Bonus points if you share your screenshots with us: spicy, hilarious, provocative!]

Then ask yourself:

What feels gratifying about this?

What’s disarming, or even a little too satisfying?

What does this bot reveal about the kind of attention or energy you’re craving?

And if you really want to jump into the deep end, try Replika, one of the more popular (and controversial) AI companion apps. Replika lets you design your dream companion from the ground up: appearance, voice, personality. It even has an augmented reality feature that uses your phone camera to superimpose your AI companion into the room with you.

Talk to a Young Person

Talk to a young person in your life about it. How are they interacting with AI chatbots? Have they ever talked to an AI character? Which ones did they pick, and what did they think? What drove their initial curiosity, and how, if at all, did their interest and usage evolve over time? What was their “line in the sand”?

Wondering out loud with you all,

Ali